Illustration 2000s

It’s been one heck of a decade for the discipline of illustration, and that’s certainly not an overstatement. To those creatives whose careers straddle the so-last-century and noughties divide, it’s clear how much ground the discipline has covered – and how much it’s conquered – since the year 2000.

Over the last 10 years, this field has undergone a huge reinvention from cottage industry to creative industry. Thanks to a fresh breed of practitioners at the turn of the century, with new working methodologies and ideologies for contemporary illustration practice, an outmoded and outdated analogue craft has been dragged into the brave new digital world of the 21st century.

In charting the fall and rise, the near-death experience and radical rebirth of illustration from the mid-1990s to 2000, it’s evident that renegade innovators were at the heart of instigating genuine and challenging change. At the beginning of the 21st century, only moments away from the final nail being hammered into the coffin, a new kind of creative started a wave of illustration that would determine and define the discipline’s future, and defy those who were ready to perform its last rites.

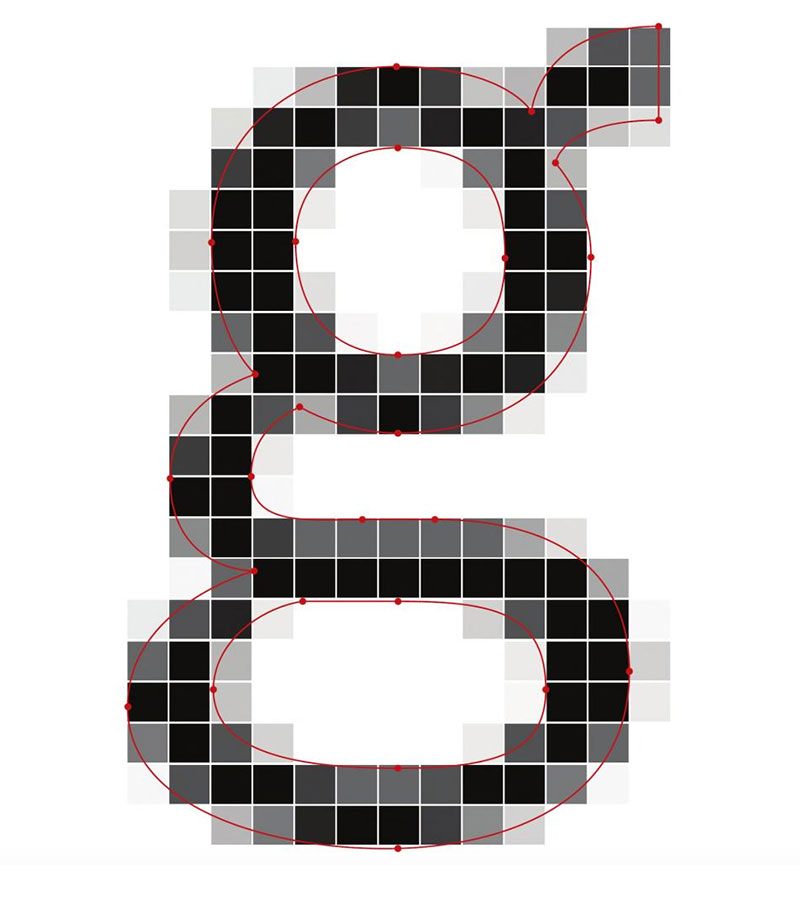

As for the tipping point – was there a moment in time when technology and ideology were in perfect harmony? Probably not, but both certainly played a role in the rejuvenation of illustration. Its changing fortune came about through a series of seemingly unrelated moments, when a new generation of young digital practitioners ran with – rather than away from – technology. Having grown up with the computer in the playroom, classroom and bedroom, they embraced the possibilities it offered for pushing new parameters, instead of remaining tied down by traditions.

While this was going on, the democratisation of the digital was also in full swing: kit, both hardware and software, was available to buy from out-of-town warehouses at rock-bottom prices. And around the same time, the internet arrived in studios up and down the land – albeit via 56k dial-up – for the first time enabling information and communication to be shared across the planet. Marshall McLuhan’s ‘global village’, a term he coined in 1962, had finally become a reality.

Ian Wright, a rare example of an ever-evolving illustrator to have continually practised across three decades, cites the internet as the major development for the discipline. “It has allowed self-publishing and digital viewing in a way unheard of before,” he says. And Anthony Burrill, no amateur himself, agrees: “The biggest advance this decade for me has been the internet and instant access to everything, all the time. It means I use it constantly for image research and when I’m looking for inspiration,” he says.

Yet aside from the small minority who jumped the analogue/digital divide, as Wright and Burrill so visibly did, the future of the discipline was to rest with, and be wrestled by, a new generation. And the outlet for their first forays into reshaping the future? The Face: a fashion, music and style magazine originally launched back in 1980 with illustration content provided by a young Ian Wright. Constantly reinventing itself, some 20 years later the publication was to offer illustration a much-needed blank canvas. By the year 2000, and under the creative guidance of Graham Rounthwaite (himself a successful illustrator-turned-art director), the likes of Jasper Goodall, Miles Donovan at Peepshow and Austin Cowdall at NEW were given carte blanche to explore new illustrative opportunities within the pages of the publication.

“I don’t think I’d be the artist I am today if I hadn’t worked with Graham,” says Goodall. “He was a great art director who knew the value of letting artists do their own thing. We kind of came together at a time when what I was doing creatively totally fitted with what he wanted for the magazine, so he pretty much left me to it with almost zero amendments for three or four years. The Face was the bible of cool,” he continues, “and that was the best place for me to be in terms of media industry perception – it got me a lot of work.”

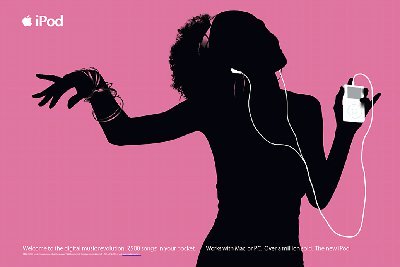

Adrian Johnson, much applauded for his work for clients that include The Independent, The Guardian, Robinsons and Stussy, agrees with Goodall: “The Face helped illustration break free of the shackles of the Radio Times and ES Magazine covers that it was synonymous with – illustration became cool. It’s now everywhere, from the printed page to the white walls of Berlin galleries, and from the Milan catwalk to the world wide web,” he says. “Illustration fought long and hard to be viewed as an equal to graphic design, but the blurring of the boundaries has given the discipline the shot it needed – credibility.”

If it was The Face that began to give illustration the confidence boost it so desperately required at the start of the decade, then it was the strut and last-gang-in-town mentality of a small number of collectives emerging from the shadows – Big Orange, Neasden Control Centre, Peepshow and Black Convoy – that drove the discipline further forward, and greatly helped to expand the overall remit of the illustrator.

Peepshow’s influence, since its formation following Miles Donovan’s graduation from the University of Brighton in 2000, should not be underestimated. Having constantly stood at the forefront of evolving illustration practice, and, just as crucially, reshaped the media’s comprehension of the role of the illustrator, Peepshow has continued to break down barriers and preconceptions about the discipline. “We work extensively within art direction, advertorial and editorial illustration, moving image, fashion and textile design, and set design,” explains Donovan – and it’s at the crossroads of these areas that new practices continue to emerge.

Naked ambition and raw desire to succeed saw John McFaul – a founding member of collective Black Convoy – split to set up McFaul, his own fully-fledged design/illustration agency, which has built up an international client base. “Illustrator became illustrators, then an art and design agency, and a bumbling creative mess became a tight business with a sense of purpose and more than a little swagger,” he admits.

With a portfolio that includes large-scale projects for clients such as Carhartt, Nokia, Havaianas and John Lennon Airport, McFaul can be forgiven for a touch of arrogance. “I’m smiling from breakfast to beers. Can there be a better job on the planet?” he asks.

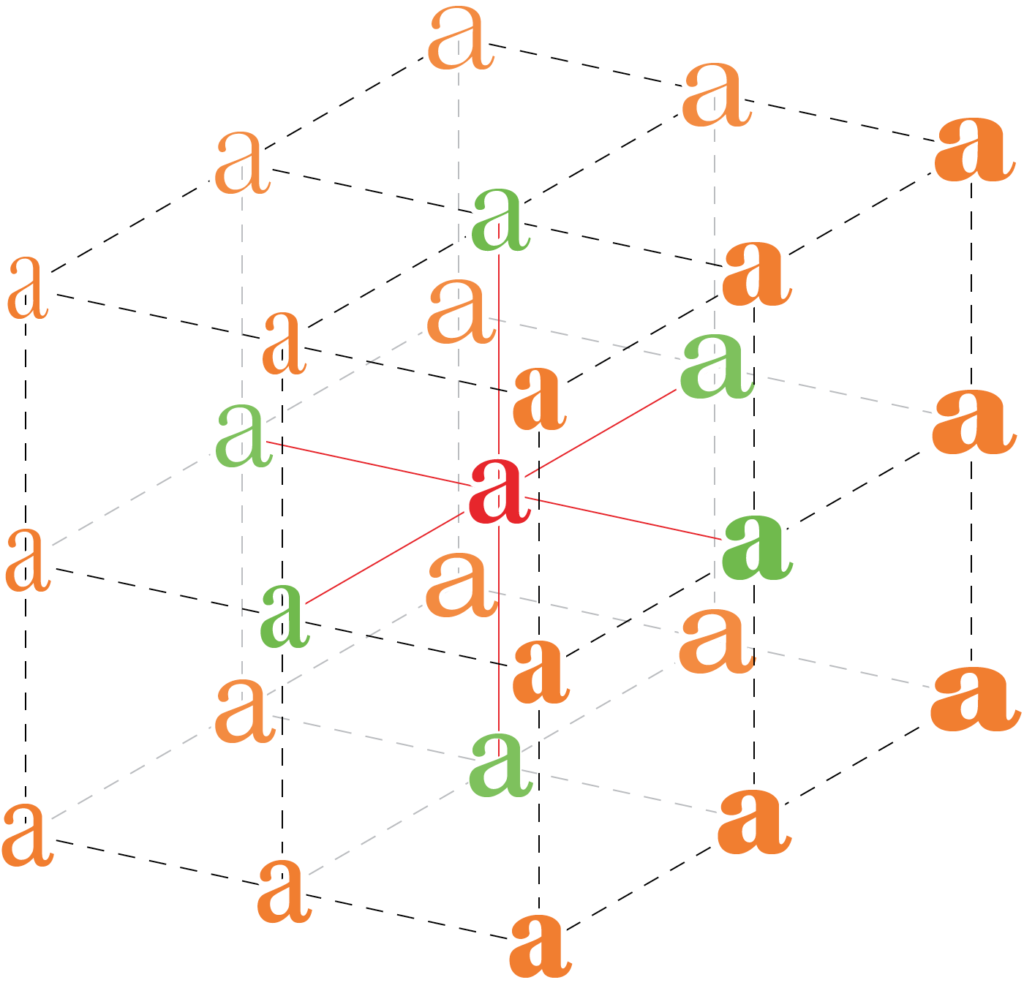

Michael Gillette, illustrator to the Beastie Boys, Levi’s and James Bond (to pick just a few names from his ever-expanding client list) thinks not. From Gillette’s studio in San Francisco – he relocated from London at the start of the decade – he explains his own take on the developments that have been seen since the end of the last century. “It’s clear that we’ve had an explosion of illustrative creativity in the past 10 years, in all different directions and across many different media. I believe that the breadth is enough to ensure that illustration will remain a very viable creative solution and not a fad,” he says. “I believe that the digital realm has put designers and illustrators back on the same page – or screen – so developments will continue in a positive and dynamic fashion.”

Throughout the decade, illustration has become noticed on an increasingly global scale, as a result of enhanced communication through the web. Commercial markets have opened for practitioners in ways that were unthinkable a decade ago. Gradually the eyes of the media industries began to look further afield than the UK and US for new illustration talent, and groups such as eBoy in Germany and :phunk studio in Singapore came under the spotlight.

With corporate companies like Coca-Cola, Nike and Levi’s waiting in line to work with the pick of the bunch, some illustration outfits started to attain cult status. eBoy, comprising an ex-bricklayer, an electrician and a musician, created entirely digital pixelated worlds that couldn’t fail to impress – millions of pixels arranged to create hyper-worlds that pointed towards a Utopian urban dream.

Meanwhile, in Singapore :phunk studio (another truly global collective) were merging art and design, turning their hands to a never-ending range of projects. From skateboards, bikes, vinyl figures, bags, club interiors and exhibitions to retail graphics and design for print and screen, all were subjected to the :phunk studio visual approach.

“We get bored easily,” they explain. “We like to explore, express and communicate through different media.” Today, illustration is a wholly international discipline, manifesting itself through a seemingly never-ending array of outlets compared to the canvas it had in 2000.

So what and where next for illustration? Ambitious for the discipline, Holly Wales champions the new role of the illustrator, but certainly isn’t letting recent progress stand in the way of further transformations in the industry. “I’d like to see much more collaboration – a huge embrace of technology and illustrators behaving more like art directors on bigger projects,” Wales says.

Others are beginning to see the future for illustrators as animators: “As magazines increasingly go online,” says Jasper Goodall, “so the opportunities for non-static illustration increases.” Adrian Johnson is in agreement: “It appears, and I say this with some regret, that the printed page has had its day, and yet the possibilities for illustration online are astonishing. Illustrators will have to get their heads round animation, as illustration comes alive!”

Changes in technology and ideology have transformed and continue to transform contemporary illustration, with emerging practitioners keen to continue to push at the blurred edges of existing boundaries. Rose Blake is a recent graduate of Kingston University’s BA Illustration and Animation course, and is now in her first year of study on a Communication Art and Design MA course at the Royal College of Art. Blake was only 13 years old in 2000, and yet she captures the spirit of today’s young mavericks: “I’ve learnt that you have to be human and put that into your work,” she says. “I make work about stuff that really means something to me – I like honest work.”

Anthony Burrill, despite having been around the block a few times, agrees wholeheartedly with Blake. “Tread your own path,” he advises. “Be aware of everything that’s going on, but find your own voice.” And Ian Wright, from his studio in NYC and with a career spanning three decades, offers sage advice for the newcomer: “The future is always uncertain, yet look at it as a positive challenge and, above all, stick to it and keep the faith!”

We look forward to seeing what the next 10 years have in store: here’s to another heck of a decade for illustration.

__________

While the word “illustrator” might bring to mind a children’s book artist, these five illustrators graduated from storybooks decades ago. Thanks to the ever-growing arsenal of available digital media creation tools, the world of illustration has undergone a major transformation over the years.

Today’s illustrators blend traditional and digital media to create artwork for magazines, books, advertisements, movies and more. While they use many of the same tools, each illustrator has an entirely different style. Keep reading to see each artist’s unique take on 21st century illustration.

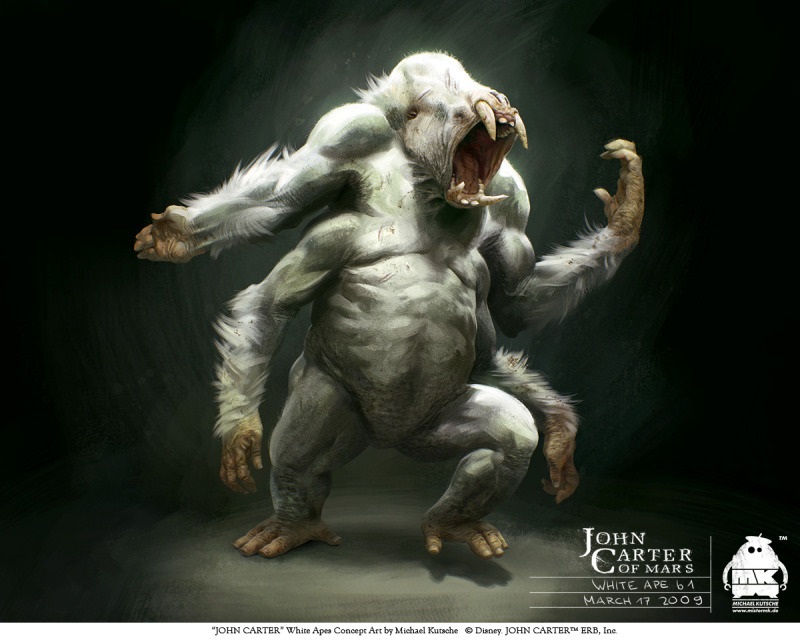

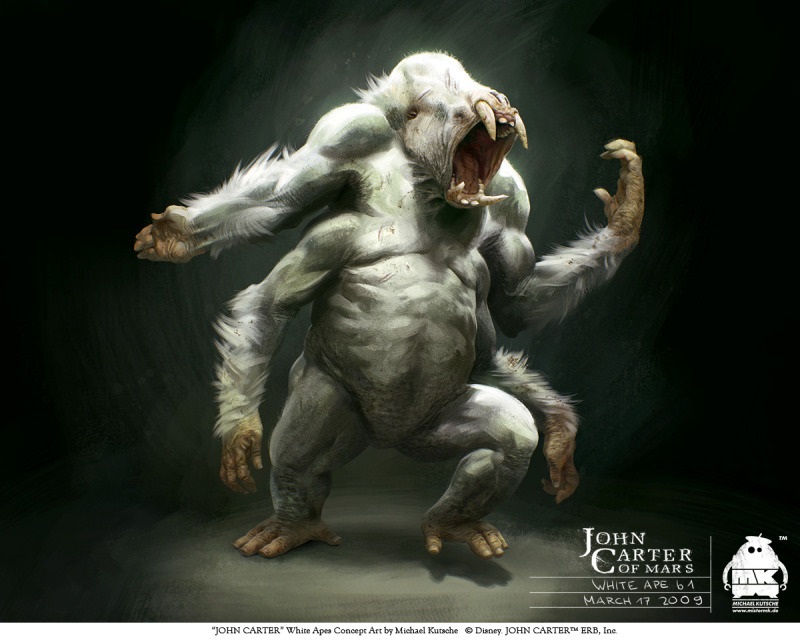

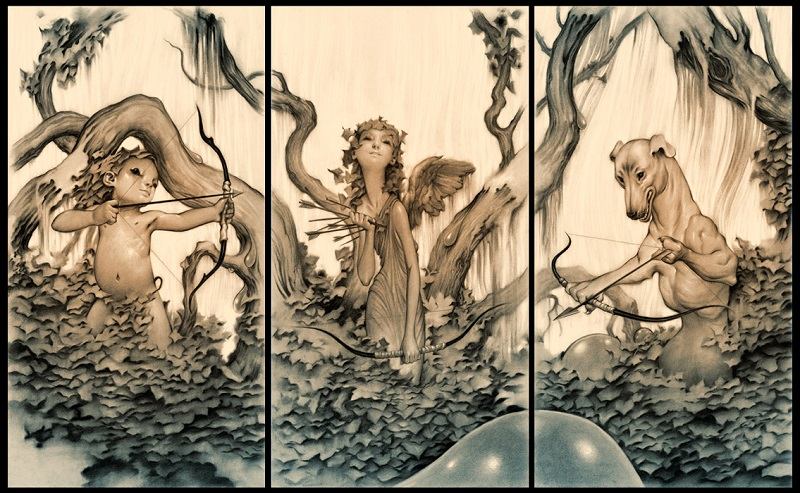

Michael Kutsche

If you’ve watched Thor, John Carter or Oz the Great and Powerful, then you’re already familiar with Michael Kutsche’s work. Kutsche was born in Germany in 1970, and like many other illustrators, began drawing and illustrating at an early age. As his work demonstrates, Kutsche has never lacked imagination or the skill to turn his ideas into awesome otherworldly creatures.

Kutsche got his first big break as part of the design team for Tim Burton’s “Alice in Wonderland”, which was released in 2010. The self-taught German illustrator works in both traditional and digital media, usually drawing out his concepts before incorporating digital elements. His work with high-profile blockbuster hits has earned him worldwide acclaim.

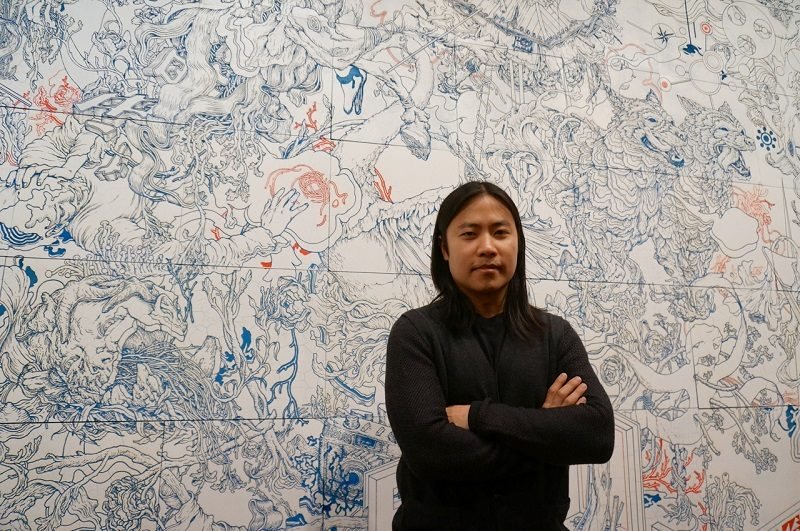

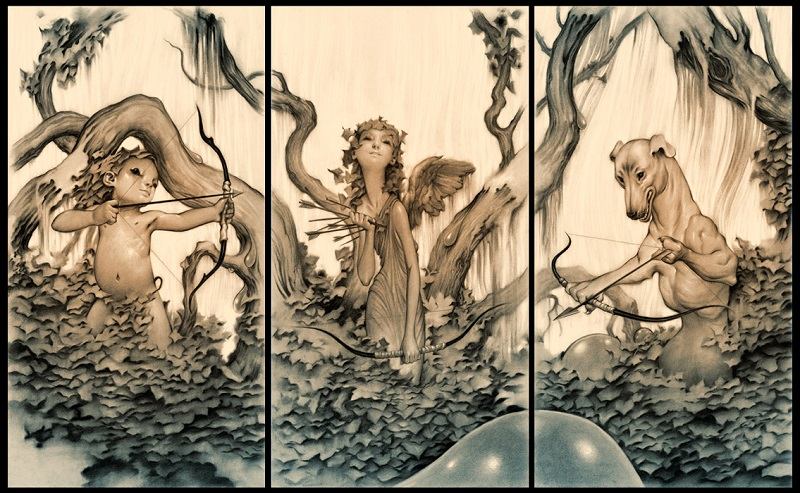

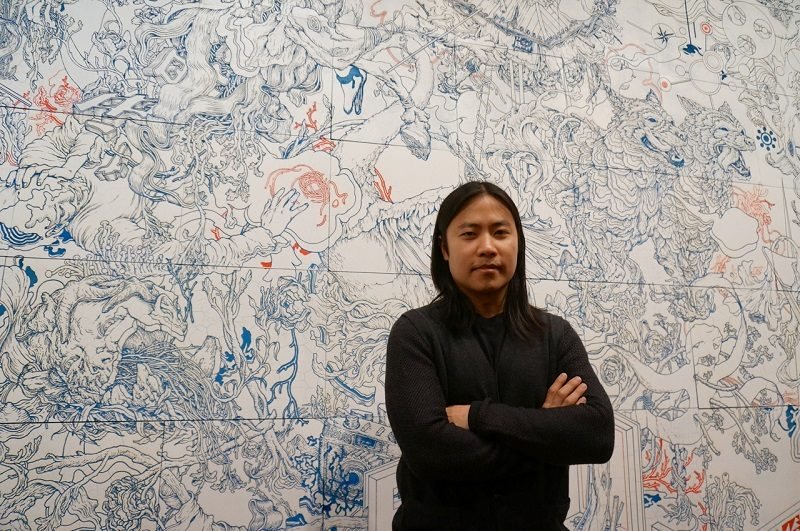

James Jean

James Jean is a Taiwanese-American artist known for both his popular commercial illustrations and his fine art gallery work. Jean was born in Taiwan in 1979 and raised in New Jersey. In 2001, he graduated from The School of Visual Arts and began his uber-successful career working with companies like Prada, Atlantic Records and DC Comics.

Many critics and fans now recognize Jean as one of the industry’s best illustrators. His style is characterized by unique, ethereal energy and sophisticated compositions. Jean often incorporates curvilinear lines and the wet media effect to complement the unusual perspectives in his work.

Brian Despain

Once you become familiar with Brian Despain’s smooth, antique illustration style, you’ll be able to pick out his artwork from a mile away. Despain loved art from an early age and spent much of his younger years daydreaming and drawing. Truly a sign of the technological times in which we live, Despain first learned to paint digitally, and only recently began painting with oils.

Despain has been particularly successful in the video game industry, though as a professional concept illustrator he works on a variety of projects. His depiction of robots and his use of a muted palette—browns, tans, and navy blues are his go-to color scheme—have carved out a unique illustration style that’s garnered him well-deserved critical and commercial acclaim.

Zutto

Zutto, whose real name is Alexandra Zutto, is a self-taught freelance illustrator based in Russia. Using Adobe Illustrator, she creates colorful, playful scenes straight from her imagination, hoping to use her art to communicate with the outside world. Zutto also publishes works-in-progress as a sort of tutorial, giving fans an in-depth look at her artistic process.

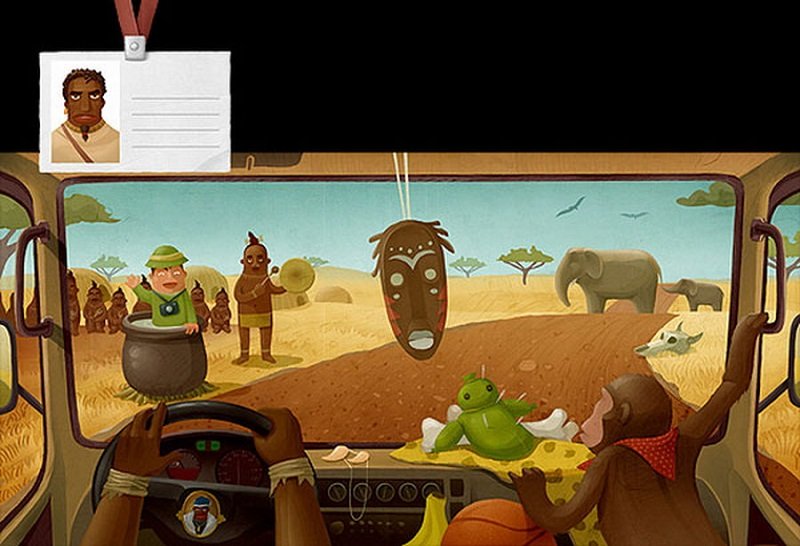

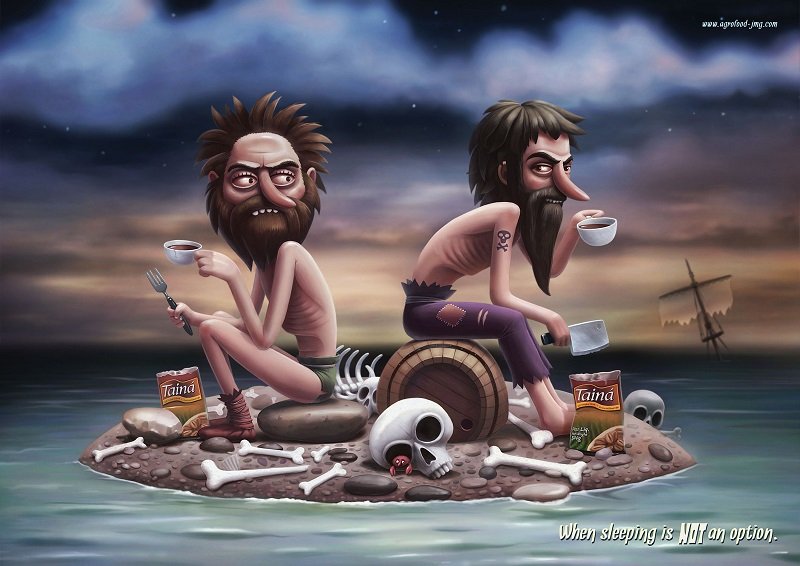

Andrey Gordeev

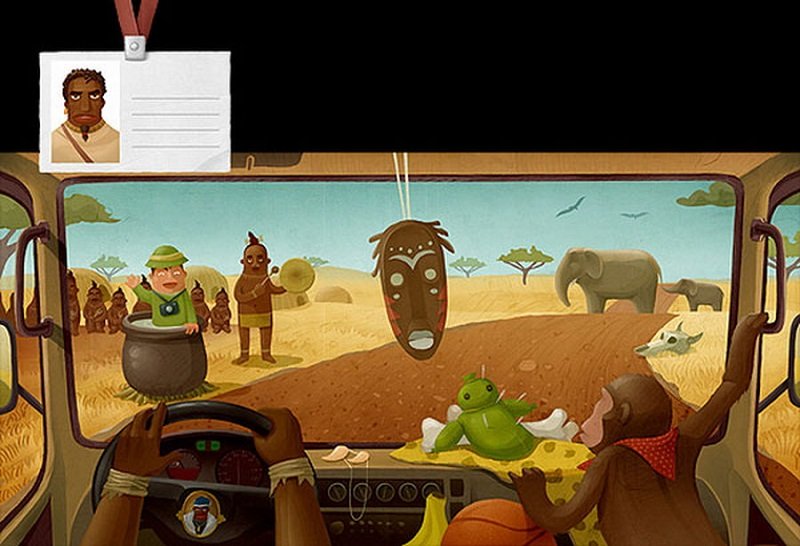

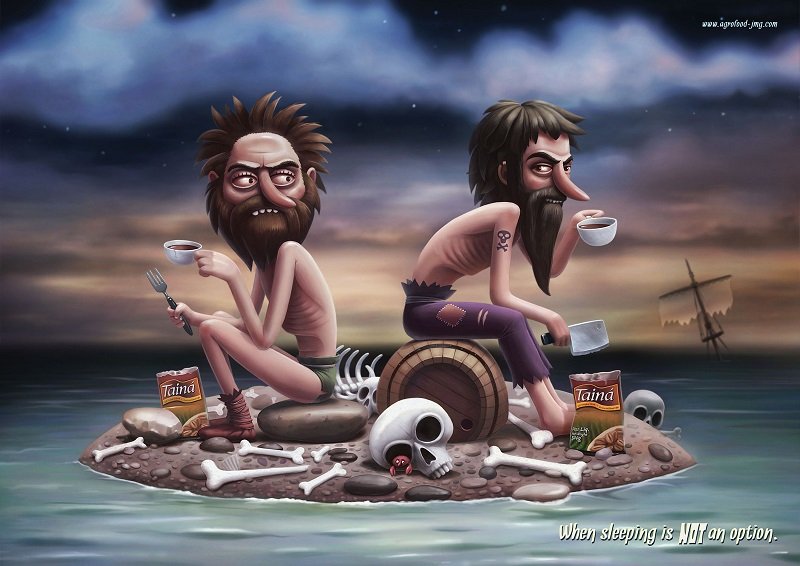

Andrey Gordeev is a Russian artist whose distinct style is easily recognizable. As evident from his work, Gordeev rarely takes himself seriously—his digital illustrations are colorful, fun and often humorous.

In one of his most popular series, Around the World in 12 Months, Gordeev drew a series of truck drivers from all over the world for a corporate calendar.